Dog Breed Identification Using Transfer Learning

Try predicting your dog’s breed here!

Fascinated by the power of deep learning models for image recognition, and also because of my love for dogs, I started experimenting with building models on the Stanford Dogs dataset. It has 20,580 images of 120 dog breeds.

Many people have tried their own approaches to classifying dogs with this dataset, including using facial features, localizing dogs with the YOLO algorithm, and many more. Khosla et al. pointed out several challenges of this task:

- Small inter-class variations – different breeds can share the same facial features but differ in color, and vice versa

- Large intra-class variations – the same breed could have different poses and even colors

- Large background variations – photos taken outdoors or indoors, with or without people

Deep learning computer vision models such as Resnet, Alexnet, Vgg, Squeezenet, Densenet, and Inception have advanced rapidly in recent years. Here is a nice post summarizing some of them. In this blog post, I’m going to show the results of comparing these models on the Stanford Dogs dataset.

There are two types of transfer learning with pretrained models: fine-tuning, which updates all of a pretrained model’s parameters (the parameters of every layer), and feature extraction, which updates only the parameters of the final output layer. I used feature extraction, freezing the weights of all layers except the output layer, because the Stanford Dogs images come from ImageNet, the larger and broadly similar dataset the models were pretrained on.
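With feature extraction, the only trainable weights are those of the new output layer. A fully connected layer mapping d input features to k classes has d·k weights plus k biases. The sketch below assumes torchvision’s Inception v3, whose main classifier (`fc`) takes 2048 input features, and ignores its auxiliary classifier; the helper name `linear_head_params` is hypothetical.

```python
def linear_head_params(in_features: int, num_classes: int) -> int:
    """Number of trainable parameters in a fully connected output layer."""
    weights = in_features * num_classes  # one weight per (feature, class) pair
    biases = num_classes                 # one bias per class
    return weights + biases


# Assumption: torchvision's Inception v3 feeds 2048 features into its final fc layer.
INCEPTION_V3_FC_IN = 2048
NUM_BREEDS = 120  # Stanford Dogs breeds

head = linear_head_params(INCEPTION_V3_FC_IN, NUM_BREEDS)
print(f"Trainable parameters in the new 120-way head: {head:,}")  # 245,880
```

In PyTorch, freezing is typically done by setting `requires_grad = False` on the pretrained parameters before replacing the final layer, so only this small head receives gradient updates.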

Because the number of available pictures varies by breed (from 148 to 252; see below), I used 140 pictures for each of the 120 breeds (16,800 images in total) and randomly selected 112 of them (80% of 140) for training and the remaining 28 (20% of 140) for validation. I was initially worried that having more pictures for certain breeds would bias the model; however, with data augmentation such as cropping, rotating, and changing lighting conditions, this should not be much of a problem.
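The per-breed sampling can be sketched in a few lines of standard-library Python. The function name `balanced_split`, the dictionary input format (breed name mapped to a list of image paths), and the fixed seed are assumptions for illustration, not the code used for this post.

```python
import random


def balanced_split(images_by_breed, per_breed=140, train_frac=0.8, seed=0):
    """Sample the same number of images per breed, then split each breed's
    sample into training and validation subsets."""
    rng = random.Random(seed)                 # seeded so the split is reproducible
    n_train = round(per_breed * train_frac)   # 112 when per_breed=140
    train, val = {}, {}
    for breed, images in images_by_breed.items():
        if len(images) < per_breed:
            raise ValueError(f"{breed} has only {len(images)} images")
        sample = rng.sample(images, per_breed)  # random subset, no replacement
        train[breed] = sample[:n_train]
        val[breed] = sample[n_train:]
    return train, val


# Hypothetical file lists standing in for two real breed folders.
fake = {
    "redbone": [f"redbone_{i}.jpg" for i in range(148)],
    "maltese": [f"maltese_{i}.jpg" for i in range(252)],
}
train, val = balanced_split(fake)
print(len(train["redbone"]), len(val["redbone"]))  # 112 28
```

Capping every breed at the smallest usable count (140 here, below the minimum of 148) keeps the classes balanced without discarding any breed.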

$ for breed_folder in */; do printf '%s\t%s\n' "${breed_folder%/}" "$(ls "$breed_folder" | wc -l)"; done | sort -t $'\t' -k 2,2n

| Breed | Number of pictures |
|---|---|
| redbone | 148 |
| Pekinese | 149 |
| Golden retriever | 150 |
| … | … |
| Afghan hound | 239 |
| Maltese dog | 252 |

Input pictures

Let’s take a look at what the pictures look like. Below are 4 randomly selected images, with their true labels shown on top in the same order.

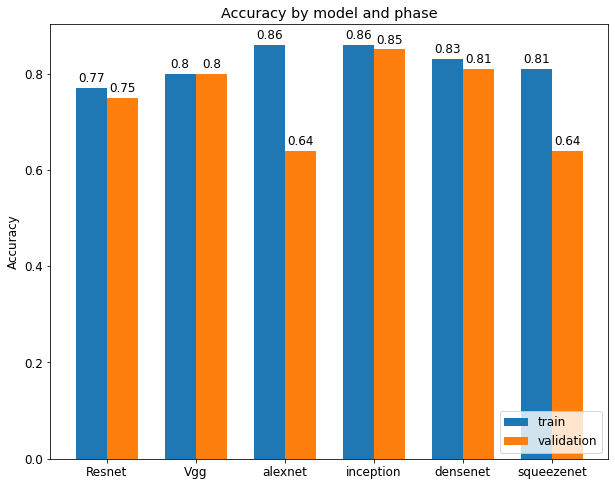

Performance overview

The performance of six different deep learning models is shown below. Except for Squeezenet, which I trained for 50 epochs, the models were trained for only 30 epochs (each of these runs took around 35 minutes of wall-clock time on a GPU). Although their architectures differ, all models were trained with the same stochastic gradient descent (SGD) optimizer (learning rate = 0.0001, momentum = 0.9). Alexnet and Squeezenet overfit, while Vgg, Inception, and Densenet all reach good and comparable accuracy on both the training and validation sets (accuracy ≥ 0.8). All models’ performance could be improved with additional tuning, which will be my next step.
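For reference, SGD with momentum keeps a running "velocity" of past gradients. A minimal scalar sketch, assuming the PyTorch `torch.optim.SGD` convention with no dampening and no Nesterov term (v ← μ·v + g, then θ ← θ − lr·v); the function name `sgd_momentum` and the toy loss are hypothetical.

```python
def sgd_momentum(grad_fn, theta, lr=1e-4, momentum=0.9, steps=1):
    """Run SGD with momentum on a single scalar parameter.

    Update rule (PyTorch-style, no dampening, no Nesterov):
        v     <- momentum * v + grad
        theta <- theta - lr * v
    Returns the parameter value before and after every step.
    """
    velocity = 0.0
    history = [theta]
    for _ in range(steps):
        grad = grad_fn(theta)
        velocity = momentum * velocity + grad  # decaying sum of past gradients
        theta = theta - lr * velocity          # step against the smoothed gradient
        history.append(theta)
    return history


# Toy example: minimize f(theta) = theta**2, whose gradient is 2 * theta.
# A larger learning rate than the post's 0.0001 is used so the movement is visible.
path = sgd_momentum(lambda t: 2 * t, theta=1.0, lr=0.1, momentum=0.9, steps=2)
print(path)
```

With a consistent gradient g, the velocity approaches g / (1 − μ), so momentum = 0.9 roughly scales the effective step by up to 10×; with the post’s learning rate of 0.0001 that is an effective step of about 0.001 per unit of gradient.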

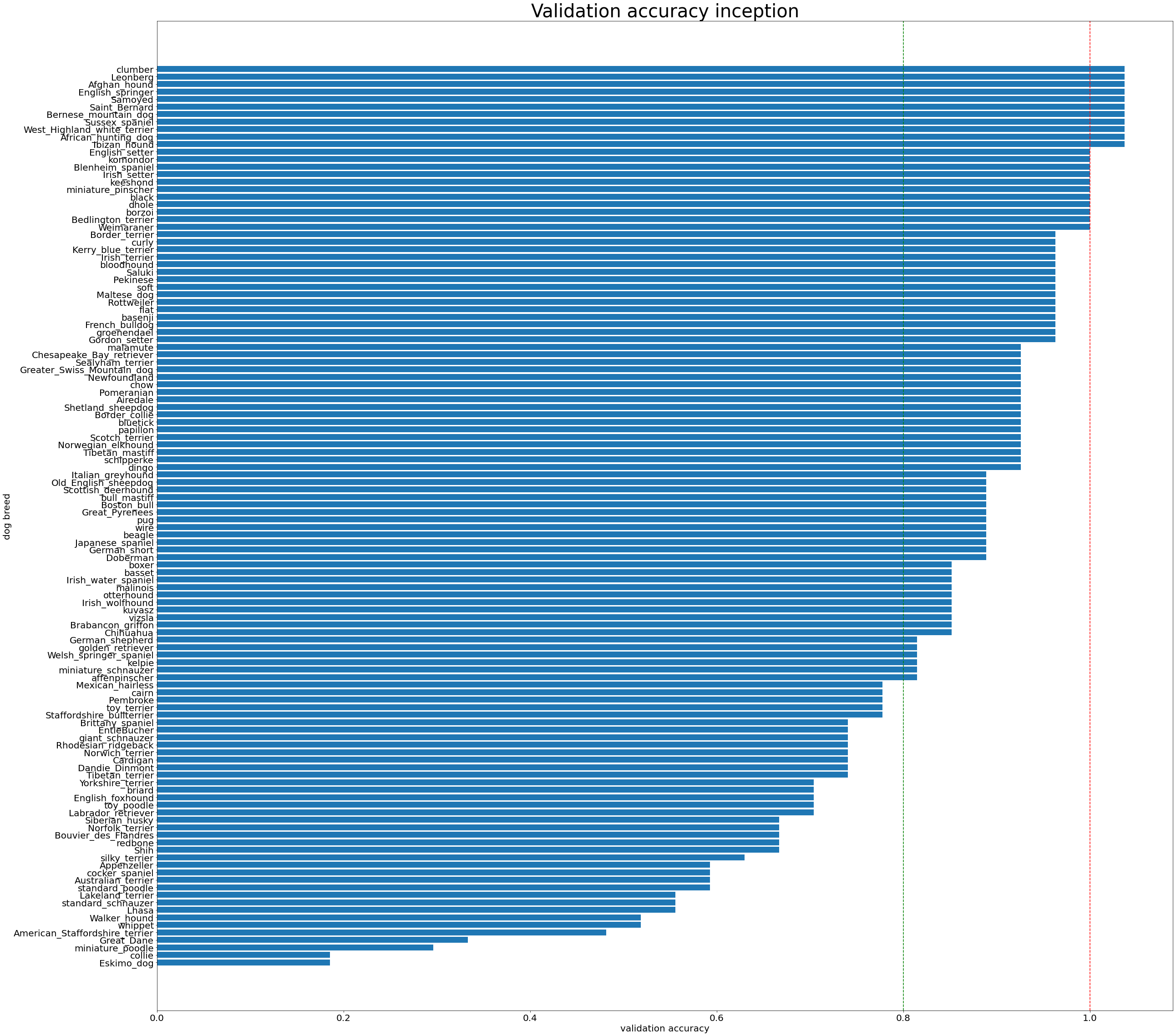

The table below lists, for each model, the number of breeds whose accuracy on the validation set exceeds 0.8. The Inception model shows the most promising accuracy on both the training and validation sets, so we will focus on it for now.

| Model | Breeds with validation accuracy > 0.8 |
|---|---|
| inception | 91 |
| densenet | 82 |
| vgg | 72 |
| resnet | 65 |
| alexnet | 24 |
| squeezenet | 24 |
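Counts like these can be computed from the validation labels and predictions. A standard-library sketch, assuming the true and predicted breeds are available as two parallel lists; `breeds_above_cutoff` is a hypothetical helper and the example labels are made up, not results from this post.

```python
from collections import Counter


def breeds_above_cutoff(true_labels, pred_labels, cutoff=0.8):
    """Count breeds whose per-breed accuracy is strictly greater than `cutoff`.

    Per-breed accuracy = correct predictions for that breed / images of that breed.
    """
    totals = Counter(true_labels)
    correct = Counter(t for t, p in zip(true_labels, pred_labels) if t == p)
    accuracy = {breed: correct[breed] / n for breed, n in totals.items()}
    n_above = sum(1 for acc in accuracy.values() if acc > cutoff)
    return n_above, accuracy


# Hypothetical labels for three breeds, five validation images each.
y_true = ["pug"] * 5 + ["beagle"] * 5 + ["boxer"] * 5
y_pred = ["pug"] * 5 + ["beagle"] * 4 + ["pug"] + ["boxer"] * 3 + ["beagle"] * 2
count, per_breed = breeds_above_cutoff(y_true, y_pred)
print(count, per_breed)  # beagle sits exactly at 0.8, so only pug is counted
```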

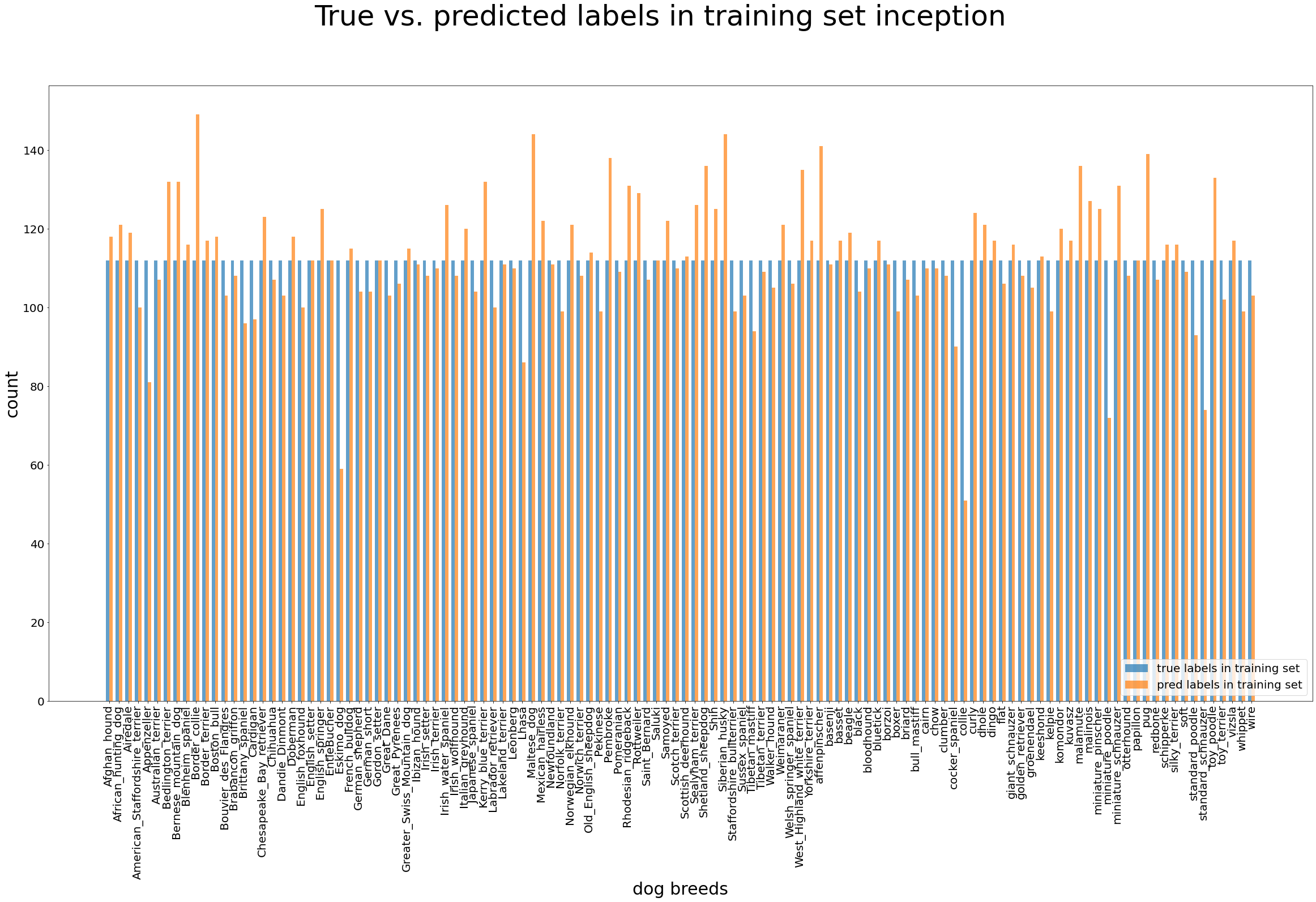

Inception: Number of true labels vs predicted labels in train set

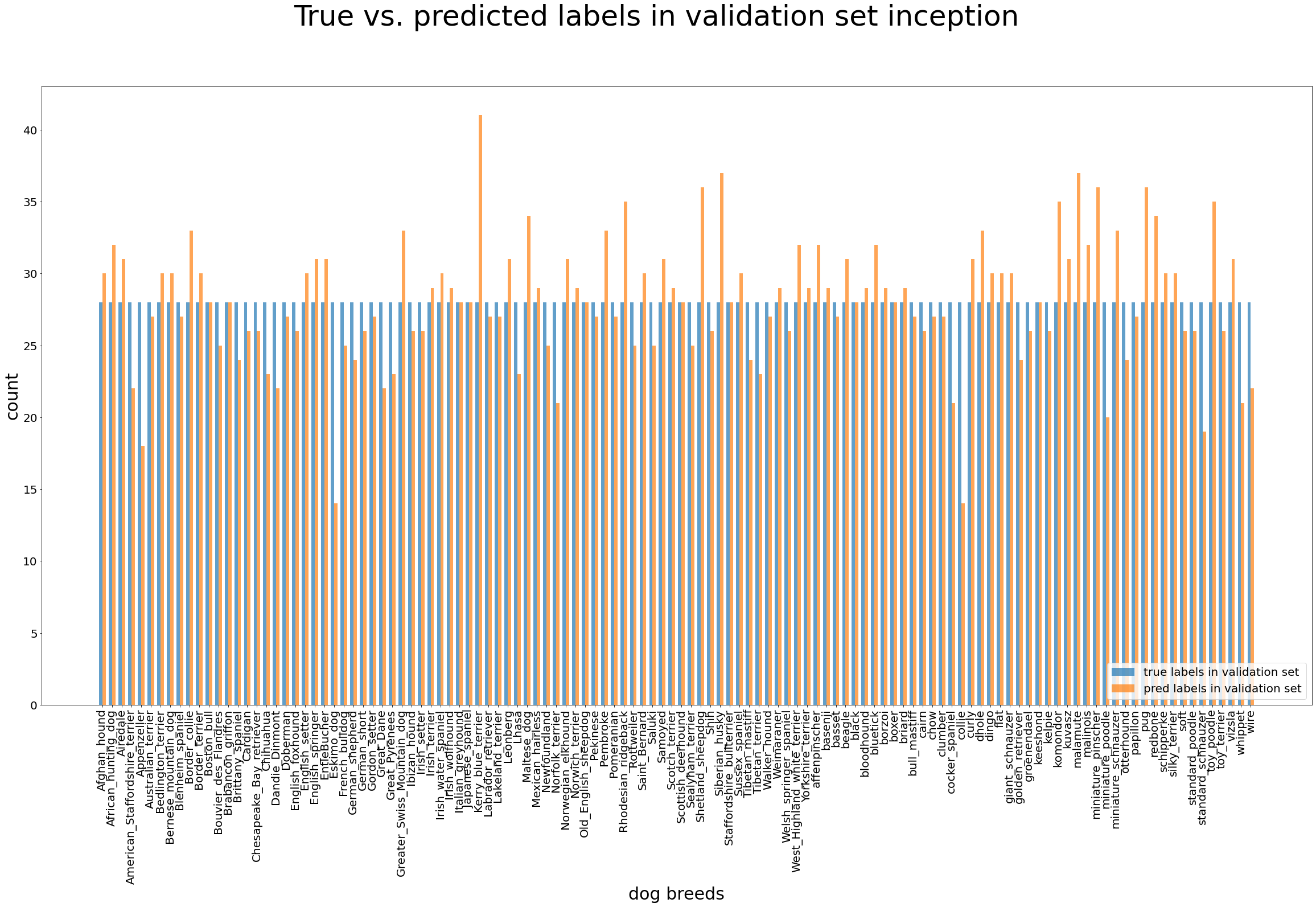

Inception: Number of true labels vs predicted labels in validation set

Inception: Classification accuracy on each breed. The red dashed line marks an accuracy of 1.0, and the blue dashed line marks the 0.8 accuracy cutoff.

Try a random image yourself

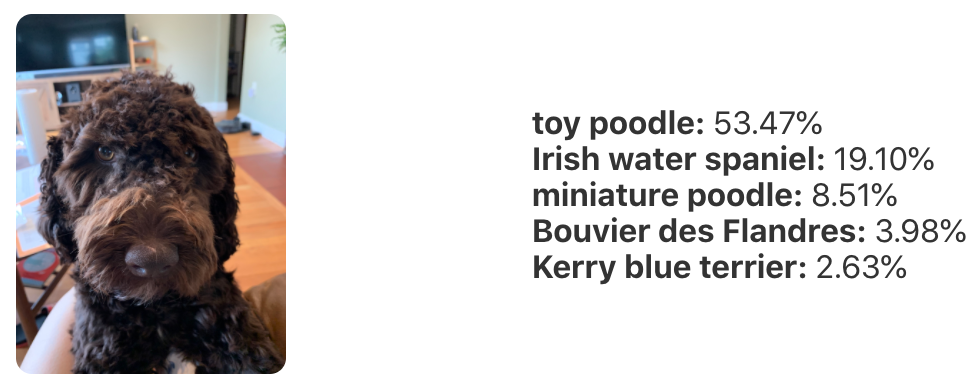

I tried predicting the breed of my dog Rosie, an 11-month-old labradoodle puppy. Labradoodles are not in the Stanford Dogs dataset, so the model can only describe her as a mixture of one or more similar breeds from that dataset.
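Because the classifier has one output per Stanford Dogs breed, a dog from outside the dataset can only be described through its highest-scoring known breeds. A minimal sketch, assuming the model returns one raw score (logit) per breed: apply a softmax and keep the top k. The breed names and scores are placeholders, not Rosie’s actual output, and `top_k_breeds` is a hypothetical helper.

```python
import math


def softmax(logits):
    """Convert raw scores into probabilities that sum to 1."""
    m = max(logits)                           # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def top_k_breeds(logits, breeds, k=3):
    """Return the k most probable (breed, probability) pairs, highest first."""
    probs = softmax(logits)
    ranked = sorted(zip(breeds, probs), key=lambda bp: bp[1], reverse=True)
    return ranked[:k]


# Placeholder scores for four hypothetical breeds.
breeds = ["breed_a", "breed_b", "breed_c", "breed_d"]
logits = [2.0, 1.5, 0.1, -1.0]
for breed, p in top_k_breeds(logits, breeds, k=2):
    print(f"{breed}: {p:.2f}")
```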

Here is what I got in return, and it’s true that the labradoodle is a mixture that includes both poodle and Irish water spaniel!

Feel free to try it yourself at https://dog.jiayiwu.me/. The web interface was built by my smart husband!